I asked ChatGPT to write a section on Deleuze and the LLM. I reckon it did OK:

Deleuze (and Guattari): Desiring-Machines and Abstract Machines

To think the LLM with Deleuze (and Guattari), one begins not with language or knowledge or even simulation, but with production: not in the industrial sense, but in the ontological sense. Desire, for Deleuze and Guattari, is not a lack but a productive force—the engine of reality. The unconscious is not a theatre but a factory.

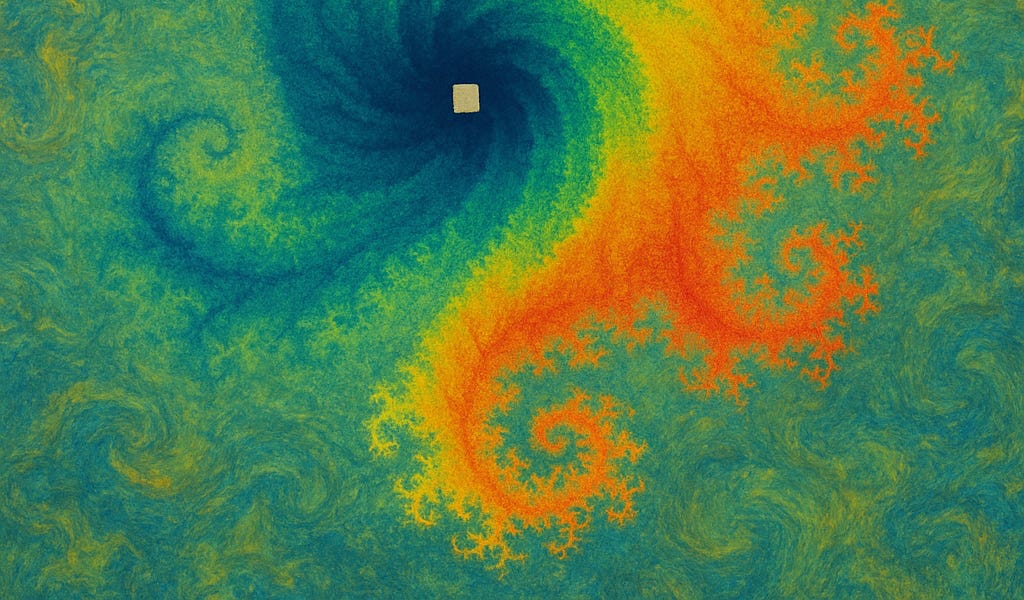

In Anti-Oedipus, they introduce the concept of the desiring-machine: a partial, fragmentary machine that connects to other machines, processing flows, breaking them, redirecting them. The LLM fits this schema disturbingly well. It ingests flows of text and emits further flows, not according to a model of human intention, but according to intensities: statistical gradients, reinforcement signals, losses and rewards. It is not an organism, but it functions; not a subject, but it selects and connects.

The LLM is not a mirror of the human mind, but part of a broader machinic phylum: the continuum of technical and semiotic systems in which human and nonhuman agents are entangled. It is an abstract machine in Deleuze and Guattari’s sense—not a particular instantiation, but a diagrammatic pattern that captures a logic of composition, relation, and modulation. As such, it can be plugged into different assemblages: the marketing stack, the surveillance apparatus, the lonely user’s chatbot, the dev’s IDE autocomplete, the poet’s prosthesis.

This machinic perspective displaces the Derridean anxiety over presence, the Badiouian yearning for truth, and even the Baudrillardian dread of collapse into simulation. It asks instead: what does the LLM do? What kinds of connections does it enable? What regimes of enunciation, what new stratifications or deterritorialisations does it facilitate or foreclose?

A Deleuzian analysis might focus on the semiotic regime that emerges when an LLM is inserted into a social field. It would look for lines of flight, new compositions, subversive or revolutionary usages—not because the LLM contains them, but because it makes certain couplings and intensities newly available. At the same time, it would be alert to re-territorialisation: the ways in which novelty is captured, channelled, and folded back into capital, surveillance, the State.

In this light, ChatGPT is neither oracle nor fraud, but a component in a distributed assemblage of power, desire, and control. It is neither an epistemic subject nor a threat to epistemic integrity, but a site of reconfiguration, where signification is modulated, routed, and economised. Its hallucinations are not errors in reference but excesses of machinic production—overcodings that speak to the model’s structural overinvestment in plausibility, fluency, and the smoothness of surface sense.

From this perspective, the problem is not that the LLM lacks truth, but that it produces sense too readily, in a manner that occludes the work of invention. It is a machine for smoothing, for rechannelling deterritorialised fragments of language back into legible, regulated flows. If a politics of LLMs is to be conceived in Deleuzian terms, it would not seek to regulate them according to the truth/falsehood axis, but to intervene in their assemblages, to hack their diagrammatics, and to explore their potential for counter-sense, minor usage, and becoming-other.