Artificial intelligence officially gets scary

- Thread starter Leo

Murphy

cat malogen

i use we as in, we, Cyberdyne Systems.

Article that seems to confirm current suggestions that the DALL-E image maker has developed its own secret language without any prompting:

hothardware.com

They've denied it but there's definitely something to this

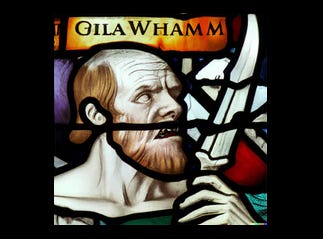

Apoploe vesrreaitais

An Image Generation AI Created Its Own Secret Language But Skynet Says No Worries

A researcher claims that DALL-E, an OpenAI system that creates images from textual descriptions, is making up its own language.

Clinamenic

Binary & Tweed

I wonder how much of this is just artifacts of the training sets? Even if that's the case, it's still effectively sensing patterns that don't make sense to us.

If you read this, where they've had access to the API etc., it says that DALL-E is built on top of CLIP, so definitely not directly from the training set, though this bird phrase does seem to have a bit of a correlation.

The vegetable one is weird as well, no doubt they'll find more as they dig. Did anyone read Life 3.0?

vimothy

yurp

I imagine that's exactly what's happening. If you think of image recognition, the intermediate layers in a neural net build up abstract images which are then used by later layers to match against more complicated and concrete images. You could potentially feed the network an image based on those abstract images and have it recognise it as a cat or whatever, even though it's completely abstract and synthetic.
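To make the "feed it a synthetic image" idea concrete, here is a minimal sketch of activation maximisation: start from a bland input and nudge the pixels uphill on the network's "cat" score. Everything in it is an assumption for illustration: the four-pixel "image", the hand-picked weights `W1` and `W2`, and the function names are hypothetical, and this is not how DALL-E or any real classifier is wired up.

```python
import math

# Hypothetical, hand-picked weights for a toy 2-layer network (not a trained model).
W1 = [[1.0, -1.0, 0.5, 0.0],    # hidden unit 0
      [0.0, 1.0, -0.5, 1.0],    # hidden unit 1
      [-1.0, 0.5, 1.0, -0.5]]   # hidden unit 2
W2 = [1.5, -1.0, 0.8]           # hidden units -> "cat" logit


def forward(pixels):
    """Return (hidden activations, cat score in [0, 1]) for a 4-pixel input."""
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pixels))) for row in W1]
    logit = sum(w * h for w, h in zip(W2, hidden))
    return hidden, 1.0 / (1.0 + math.exp(-logit))


def input_gradient(pixels):
    """Gradient of the cat logit with respect to each input pixel."""
    hidden, _ = forward(pixels)
    grad = [0.0] * len(pixels)
    for w2, h, row in zip(W2, hidden, W1):
        if h > 0.0:  # ReLU only passes gradient through active units
            for i, w in enumerate(row):
                grad[i] += w2 * w
    return grad


def synthesize(start=(0.5, 0.5, 0.5, 0.5), steps=50, lr=0.1):
    """Gradient ascent on the input, keeping pixels in the valid range [0, 1]."""
    pixels = list(start)
    for _ in range(steps):
        grad = input_gradient(pixels)
        pixels = [min(1.0, max(0.0, p + lr * g)) for p, g in zip(pixels, grad)]
    return pixels


if __name__ == "__main__":
    synthetic = synthesize()
    print("synthetic input:", synthetic)
    print("cat score:", forward(synthetic)[1])
```

With these made-up weights the score starts below 0.5 for the flat grey input and ends above 0.9, even though the resulting "image" was never a picture of anything. Real feature visualisation does the same thing with a trained network and a framework's autograd instead of a hand-written gradient.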

Slothrop

Tight but Polite

Hack the planet:

spectrum.ieee.org

Slight Street Sign Modifications Can Completely Fool Machine Learning Algorithms

Minor changes to street sign graphics can fool machine learning algorithms into thinking the signs say something completely different
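For anyone wondering how a minor change can flip a classifier, here is a rough sketch using a fast-gradient-sign style perturbation on a toy linear detector. This is a swapped-in, simpler digital technique, not the physical sign modification method from the article, and the weights, pixel values and function names below are all hypothetical.

```python
import math

# Hypothetical weights of a toy linear "stop sign" detector over 6 pixel values.
WEIGHTS = [0.9, -0.6, 0.8, -0.7, 0.5, -0.4]
BIAS = -0.2


def stop_sign_probability(pixels):
    """Logistic score: values above 0.5 mean 'this is a stop sign'."""
    logit = BIAS + sum(w * p for w, p in zip(WEIGHTS, pixels))
    return 1.0 / (1.0 + math.exp(-logit))


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm_perturb(pixels, epsilon):
    """Shift every pixel by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the logit with respect to a pixel is just
    its weight, so stepping against sign(weight) lowers the stop-sign score.
    """
    return [min(1.0, max(0.0, p - epsilon * sign(w)))
            for p, w in zip(pixels, WEIGHTS)]


original = [0.6, 0.4, 0.6, 0.4, 0.6, 0.4]
adversarial = fgsm_perturb(original, epsilon=0.15)

if __name__ == "__main__":
    print("original score:   ", stop_sign_probability(original))
    print("adversarial score:", stop_sign_probability(adversarial))
```

No pixel moves by more than 0.15, yet the toy detector goes from "stop sign" to "not a stop sign", because every small nudge pushes the score in the same direction and they add up.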

Corpsey

bandz ahoy

Interesting how there's this gen-z/ultraonline/4chan humour that's almost replicating what a subpar AI might post as a joke

Mr. Tea

Let's Talk About Ceps

Going to train a language model

Who could possibly have seen that coming, eh?

Mr. Tea

Let's Talk About Ceps

Mancbook will never be as good as Scousebook.

97% of people will never have a brain how is mancbook going to have one, behave

wektor

Well-known member

The key takeaway from the Washington Post story isn't necessarily "sentience", which is an absurd idea in itself (the real issue would always be "intelligence" above a certain level, how it is utilised, and by whom), but rather the furore being caused by individuals in the project itself.

Google may try to paint this lad as a crank (that seems to be the attempt so far), but this will be weaponised within 18 months as part of a luddite post-hyperinflation culture war.

Watch the narrative
